The murder of George Floyd, another Black American lost to police violence, has once again thrust race into the national conversation. Tell me if I’m being overly optimistic, but I have hope that this heightened awareness might be here to stay. Many have called out one-off social media posts as virtue signaling: link to a woke Insta thread on how to be an ally as a non-Black POC, and then it’s back to self-timer pics about being bored in quarantine :/

Sorry–that was unnecessarily combative. I actually think social media posts are pretty useful. For most people, posting to a story is their first real tango with activism, and even that small step should be celebrated. Still, recent buzz on social media isn’t enough to convince me that America finally cares, even when no one’s watching.

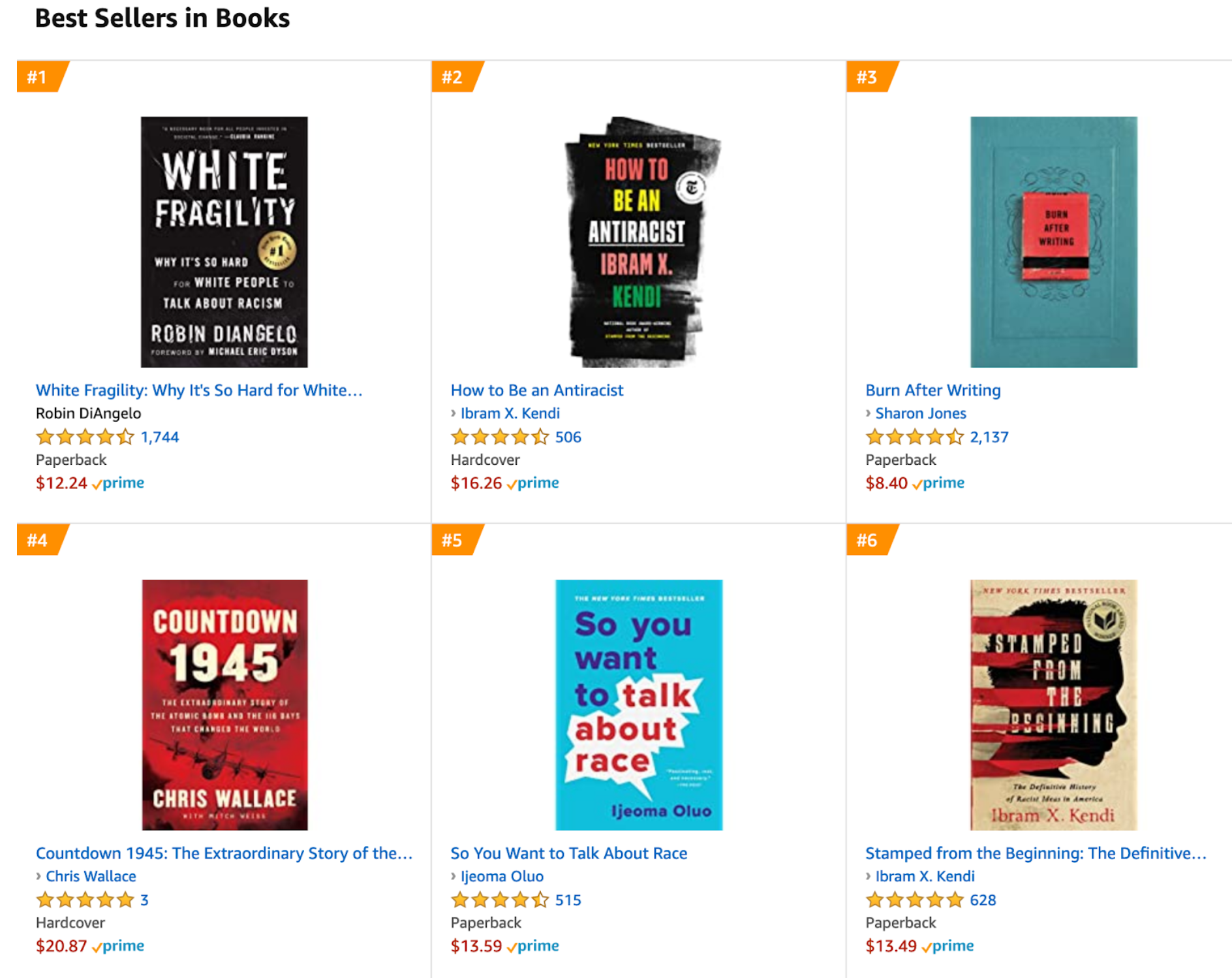

Rather, this is:

I guess millennials decided that writing down their hot takes privately is the only way not to get cancelled (#3), and boomers still love WW2 (#4). But four out of Amazon’s top six bestselling books are about race. That’s pretty cool. For every 1000 people who see your Tweet, maybe one sees your bookshelf, so maybe this is less about virtue signaling and more that non-Black people actually want to educate themselves.

I’m especially excited to see so much attention towards Dr. Ibram Kendi’s writing on antiracism, which I first found a few months ago while doing research for a term paper. Kendi argues that without explicit rejection of anti-Black sentiment, racist ideology will find its way into all Americans. He likens the proliferation of racism to tumor metastasis, an analogy motivated by the stage IV colon cancer threatening his own life.

To illustrate how racism spreads, Kendi often cites his own experiences growing up Black near the turn of the century. Despite having won civil rights, many African-Americans remained jobless on the streets or locked away in jail, unable to care for their children. The country taught Black teenagers that this was their fault: they dropped out of school, they used too many drugs, and they were absentee parents. Drowned in all this rhetoric, Black children like Kendi were indoctrinated to believe that systemic issues were their individual problems.

America’s made some progress since then. Now, we’re indoctrinated to believe that systemic racism is individual racism. “Derek Chauvin was racist, but not all cops.” We acknowledge the explicit bias of police brutality or the implicit bias of doctors not taking African-American patients’ pain seriously. Decades of psychology research on snap judgments and stereotypes paint racism as a failure of the individual, concealing structural marginalization under the guise of “being human”.

So while we’re busy figuring out how not to be racist, let algorithms make the sensitive decisions, some say. Police might accidentally over-target certain groups, so PredPol can decide when and where to send police. Doctors might accidentally under-diagnose African-American patients, so let machine learning figure it out. Unfortunately, even basic empirical analyses have already shown that most of these algorithms are overtly racist.

Enter the well-meaning group of artificial intelligence (AI) researchers hoping to use AI for social good. They see algorithmic racism as statistics that differ between groups, and so racial equity is as simple as equalizing these statistics. For example, facial recognition technology works like a charm for white men, but is woefully inaccurate for Black women. Once accuracy is matched across all groups, the algorithm is “fair”, and therefore ready for deployment.
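To make "statistics that differ between groups" concrete, here is a minimal sketch of the kind of audit this approach implies: compute accuracy and false positive rate per group, then look at the gap. The function name `group_metrics`, the group labels "A" and "B", and the toy records are all made up for illustration; they don't come from any real system or dataset.

```python
from collections import defaultdict


def group_metrics(records):
    """Compute per-group accuracy and false positive rate.

    records: iterable of (group, y_true, y_pred) tuples with 0/1 labels.
    """
    counts = defaultdict(lambda: {"correct": 0, "total": 0, "fp": 0, "neg": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        c["total"] += 1
        c["correct"] += int(y_true == y_pred)
        if y_true == 0:  # only true negatives can become false positives
            c["neg"] += 1
            c["fp"] += int(y_pred == 1)
    return {
        g: {
            "accuracy": c["correct"] / c["total"],
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else float("nan"),
        }
        for g, c in counts.items()
    }


# Hypothetical toy data: (group, true label, predicted label).
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 0, 0), ("A", 1, 1),
    ("B", 1, 0), ("B", 0, 1), ("B", 0, 0), ("B", 1, 1),
]

metrics = group_metrics(records)
accuracy_gap = abs(metrics["A"]["accuracy"] - metrics["B"]["accuracy"])
```

Under this view, "fixing" the model means driving `accuracy_gap` (or the false positive rate gap) to zero. Note that nothing in the calculation asks where the true labels came from.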

In the sphere of criminal justice, things get a little more complicated. For example, many researchers have worked on de-biasing algorithms used to assess a defendant’s risk before their trial. This line of work has two major pitfalls. One, a ‘fair’ algorithm deployed in a racist environment is a racist algorithm. A theoretical false positive rate won’t save a Black man in Kentucky from being searched without a warrant and having his fate decided by a white judge descended from plantation owners. Still, some argue that “even imperfect algorithms can improve” criminal justice – we should use computers as long as they’re more accurate and less biased than judges.

Seems reasonable enough, until we consider the second, more important pitfall: we calculate accuracy or fairness based on existing data, data which merely reflect structural effects. As civil rights organizations argue, crime data “primarily document the behavior and decisions of police officers and prosecutors, rather than the individuals or groups that the data are claiming to describe.” Decades of research have proven that groups are policed differently; consider, for example, the legally-codified discriminatory prosecution of crack vs. powder cocaine from the 80s through the Fair Sentencing Act (2010). An ‘accurate’, ‘fair’ algorithm would detain African-Americans for less severe crimes than white Americans are released for.

Unfortunately, it seems like some AI researchers have missed the point here. Instead of wondering about dataset epistemology, Richard Berk argues that accurate algorithms should perform differently for different groups, to account for their varying “base rates” of criminal activity. These base rates (typically calculated from arrest data) are uncritically portrayed as universal truths inherent to a group’s existence. In this framing, Black people are fundamentally riskier than white people, and deserve to be algorithmically handled as such. Corbett-Davies et al. further conflate a more accurate algorithm with a safer society, rather than one in which certain groups are overpoliced because we think they’re dangerous.
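A back-of-the-envelope sketch shows why arrest-derived "base rates" can't be read as facts about a group. Every number below is invented for illustration: I assume two groups with an identical underlying offense rate and change only how often an offense leads to a recorded arrest. The names `recorded_arrest_rate`, `heavily_policed`, and `lightly_policed` are hypothetical.

```python
def recorded_arrest_rate(offense_rate, detection_rate):
    """Share of a group that shows up in arrest data.

    offense_rate: fraction of the group that commits the offense (assumed).
    detection_rate: chance an offense leads to a recorded arrest (assumed).
    """
    return offense_rate * detection_rate


# Assumption: behavior is identical across groups.
offense_rate = 0.10

# Assumption: only the intensity of policing differs.
detection = {"heavily_policed": 0.50, "lightly_policed": 0.25}

base_rates = {
    group: recorded_arrest_rate(offense_rate, rate)
    for group, rate in detection.items()
}
ratio = base_rates["heavily_policed"] / base_rates["lightly_policed"]
```

In this toy setup the heavily policed group's recorded "base rate" comes out twice as high, even though behavior is identical by construction. A model trained on these records would learn the policing pattern and report it as risk.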

Algorithms reflect biases in their data. This isn’t an original thought, but it’s important to know exactly what that means in a criminal justice context. To be clear, we don’t solve this issue with more data on minority groups – the critique here is that the data itself was produced by institutionalized racism. Issues acknowledged, computational social scientists have started to take an abolitionist approach, calling for moratoriums on AI systems in law enforcement. Maybe the best way to do AI for social good is to acknowledge that AI isn’t ready for social good. But can data science positively impact criminal justice? Chelsea Barabas (from MIT Media Lab) and colleagues argue that instead of studying the marginalized, we should look where the power lies. This means, for example, identifying judges or counties that systematically over-incarcerate African-Americans. By studying structures rather than individuals, we can identify how criminality is created unevenly and make change from the top down.
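As a rough sketch of what "looking where the power lies" could mean in practice, the snippet below flips the unit of analysis from defendants to decision-makers: it computes pretrial detention rates per judge and per defendant group, then the gap between groups for each judge. This is my own illustration, not Barabas et al.'s method; the function names, judge IDs, and case records are all hypothetical.

```python
from collections import defaultdict


def detention_rates_by_judge(cases):
    """cases: iterable of (judge, defendant_group, detained) with detained as bool."""
    tallies = defaultdict(lambda: [0, 0])  # (judge, group) -> [detained, total]
    for judge, group, detained in cases:
        tallies[(judge, group)][0] += int(detained)
        tallies[(judge, group)][1] += 1
    return {key: detained / total for key, (detained, total) in tallies.items()}


def disparity_by_judge(rates, group_a, group_b):
    """Detention-rate gap (group_a minus group_b) for judges who saw both groups."""
    judges = {judge for judge, _ in rates}
    return {
        judge: rates[(judge, group_a)] - rates[(judge, group_b)]
        for judge in judges
        if (judge, group_a) in rates and (judge, group_b) in rates
    }


# Hypothetical case records: (judge, defendant group, detained before trial?).
cases = [
    ("judge_1", "Black", True), ("judge_1", "Black", True),
    ("judge_1", "Black", True), ("judge_1", "Black", False),
    ("judge_1", "white", True), ("judge_1", "white", False),
    ("judge_1", "white", False), ("judge_1", "white", False),
    ("judge_2", "Black", True), ("judge_2", "Black", False),
    ("judge_2", "white", True), ("judge_2", "white", False),
]

rates = detention_rates_by_judge(cases)
gaps = disparity_by_judge(rates, "Black", "white")
```

A raw gap like this is only a starting point: a real analysis would need to account for differences in the cases each judge sees. The point is the direction of the lens, which is aimed at the institution rather than the defendant.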

To sociologists, all this recent fuss about AI is nothing new. AI carries modern cachet, but it’s that same ‘scientific legitimacy’ that has long justified anti-Black criminal justice practice. Khalil Gibran Muhammad chronicles this evolution in his book The Condemnation of Blackness. In the 1890s, Frederick Hoffman referenced racial health and crime data to prove that Black people were more diseased and dangerous than white people, and therefore deserved to die or be jailed. In the 1990s, Rudy Giuliani justified racial profiling by citing minority arrest & incarceration rates: ‘that’s where the crime is’ (and Bloomberg still espouses similar ideas). AI is the latest incarnation of this self-fulfilling prophecy: of course African-Americans are more likely to be predicted criminal, because of the increased ‘base rates’ in their group.

Understanding systemic racism is hard. In 2000, high school senior Kendi certainly didn’t. During his speech for a public speaking contest, Kendi told his audience about all the irresponsible choices Black youths make that cause their poor outcomes in life. He ignored the roles of the education, health, and criminal justice systems that force them to fail. With or without algorithms, let’s devote our attention towards structures, not individuals, that manufacture modern oppression. Once we do, we can finally start tearing them apart.